Programming

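Learn more about embedded development by reading articles, watching videos, and joining our latest webinars, and get the most out of our products to make your embedded product development more efficient.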

How to validate a build toolchain according to IEC 61508, ISO 26262, EN 50128, and IEC 62304

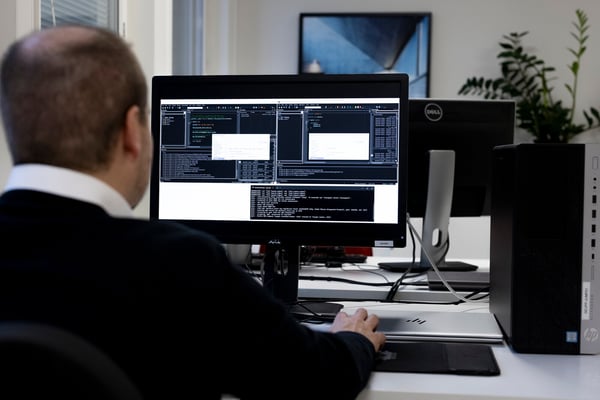

How to make effective use of RISC-V trace technology

Arm + RISC-V multicore debugging in IAR Embedded Workbench

Multicore debugging in IAR Embedded Workbench for Arm

How to make the most of the different breakpoint types in IAR Embedded Workbench

Implementing stack protection in IAR Embedded Workbench to improve code security

Take control of the stack to keep your system stable

C-RUN dynamic code analysis in standalone mode